cross-posted from: https://lemmy.sdf.org/post/12950329

Actually the original is from StackOverflow

I think it’s older than that. Well, parts of it are.

Though I adore the addition of the fade to chaos in the 2009 Stack Overflow post, I recall seeing the exact early text from that answer linked and re-pasted on older CS forums as early as 2000. It needed to be posted a lot, shortly after XHTML became popular.

Part of the contextual humor of the 2009 SO answer is that it takes the trend of the previous answers (getting longer and less coherent each time it needed to be posted) and extends it to its logical conclusion.

I do think the Stack Overflow post is the definitive version.

I wish I could find the original, or something closer, because we could compare and measure our collective loss of sanity.

deleted by creator

I don’t think that using regex to basically do regex stuff on strings that happen to also be HTML really counts as parsing HTML

deleted by creator

My regex at work is full of (<[^>]+\s*){0,5} because we don’t care about 90 percent of the attributes. All we care about is that it’s class="data I want" and that it eventually takes me to that data.

Technically, regex can’t pull out every link in an HTML document without potentially pulling fake links.

Take this example (using curly braces instead of angle brackets, because html is valid markdown):

{template id="link-template"} {a href="javascript:void(0);"}link{/a} {/template}

That’s perfectly valid HTML, but you wouldn’t want to pull that link out, and POSIX regex can’t really avoid it. At least not with just a single regex. Imagine a link nested within like 3 template tags.
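To make the template example concrete, here is a minimal Python sketch (my own illustration, not anyone's production code). `DOC`, `links_by_regex`, and `LinkCollector` are made-up names, and the extra `example.com` link is added only so there is one "real" link to find. The regex grabs every `href`, while a small parser that counts `<template>` depth can skip the inert one, however deeply the templates are nested.

```python
import re
from html.parser import HTMLParser

# The example from above with real angle brackets, plus one ordinary link
# (the example.com link is an assumption added for illustration).
DOC = (
    '<template id="link-template">'
    '<a href="javascript:void(0);">link</a>'
    '</template>'
    '<a href="https://example.com/">real</a>'
)

# Plain pattern matching: it has no idea whether a link sits inside <template>.
HREF_RE = re.compile(r'<a\s[^>]*href="([^"]*)"')


def links_by_regex(doc):
    return HREF_RE.findall(doc)


class LinkCollector(HTMLParser):
    """Collects hrefs, skipping anything nested inside <template> elements."""

    def __init__(self):
        super().__init__()
        self.template_depth = 0
        self.links = []

    def handle_starttag(self, tag, attrs):
        if tag == "template":
            self.template_depth += 1
        elif tag == "a" and self.template_depth == 0:
            href = dict(attrs).get("href")
            if href is not None:
                self.links.append(href)

    def handle_endtag(self, tag):
        if tag == "template" and self.template_depth > 0:
            self.template_depth -= 1


def links_by_parser(doc):
    collector = LinkCollector()
    collector.feed(doc)
    collector.close()
    return collector.links
```

Here `links_by_regex(DOC)` returns both hrefs, while `links_by_parser(DOC)` returns only the `example.com` one. Note that `html.parser` is a lenient tokenizer rather than a spec-compliant HTML5 parser, so treat this as a sketch of the idea only.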

deleted by creator

I would argue that that is not parsing. That’s just pattern matching. For something to be parsing a document, it would have to have some “understanding” of the structure of the document. Since regex is not powerful enough to correctly “understand” the document, it’s not parsing.

Putting a statement into old calligraphy is nice, but it all boils down to “because I said so.” If you’re going to go to that effort, you might as well put the rationale for why it can’t possibly parse the language into the explanation, rather than “because I said so.”

The section about “regular language” is the reason. That’s not being cheeky, that’s a technical term. It immediately dives into some complex set theory stuff but that’s the place to start understanding.

English isn’t a regular language either. So that means you can’t use regex to parse text. But everyone does anyway.

Huh? Show me the regex to parse the English language.

Parsing text is the reason regex was created!

Page 1, Chapter 1, “Mastering Regular Expressions”, Friedl, O’Reilly 1997.

" Introduction to Regular Expressions

Here’s the scenario: you’re given the job of checking the pages on a web server for doubled words (such as “this this”), a common problem with documents subject to heavy editing. Your job is to create a solution that will:

Accept any number of files to check, report each line of each file that has doubled words, highlight (using standard ANSI escape sequences) each doubled word, and ensure that the source filename appears with each line in the report.

Work across lines, even finding situations where a word at the end of one line is repeated at the beginning of the next.

Find doubled words despite capitalization differences, such as with The the, as well as allow differing amounts of whitespace (spaces, tabs, newlines, and the like) to lie between the words.

Find doubled words even when separated by HTML tags. HTML tags are for marking up text on World Wide Web pages, for example, to make a word bold: it is <b>very</b> very important

That’s certainly a tall order! But, it’s a real problem that needs to be solved. At one point while working on the manuscript for this book, I ran such a tool on what I’d written so far and was surprised at the way numerous doubled words had crept in. There are many programming languages one could use to solve the problem, but one with regular expression support can make the job substantially easier.

Regular expressions are the key to powerful, flexible, and efficient text processing. Regular expressions themselves, with a general pattern notation almost like a mini programming language, allow you to describe and parse text… With additional support provided by the particular tool being used, regular expressions can add, remove, isolate, and generally fold, spindle, and mutilate all kinds of text and data.

"
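For anyone who wants to see what the doubled-word task from the quoted passage looks like in practice, here is a rough Python sketch. It is not Friedl's solution from the book, just my own minimal take; `DOUBLED` and `find_doubled_words` are names I made up, and the tag handling is deliberately simplistic (anything between `<` and `>`). It also leans on a backreference (`\1`), which already goes beyond "regular" in the formal-language sense.

```python
import re

# \b(\w+)\b        captures one whole word
# (?:\s|<[^>]+>)+  allows whitespace (including newlines) and simple tags between
# \1\b             requires the same word again, as a whole word
DOUBLED = re.compile(r"\b(\w+)\b(?:\s|<[^>]+>)+\1\b", re.IGNORECASE)


def find_doubled_words(text):
    """Return the doubled words found in text, lowercased."""
    return [m.group(1).lower() for m in DOUBLED.finditer(text)]
```

On the book's own sample, `find_doubled_words("it is <b>very</b> very important")` gives `["very"]`. That is text processing over a string that happens to contain tags; it never builds any picture of the document's structure.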

There’s a difference between ‘processing’ the text and ‘parsing’ it. The processing described in the section you posted is fine, and you can manage a similar level of processing on HTML. The tricky/impossible bit is parsing the languages. For instance, you can’t write a regex that’ll reliably find the subject, object and verb in any English sentence, and you can’t write a regex that’ll break an HTML document down into a hierarchy of tags, as regexes don’t support counting depth of recursion. HTML is irregular anyway, meaning it can’t be reliably parsed with a regular parser.

For instance you can’t write a regex that’ll reliably find the subject, object and verb in any English sentence

Identifying parts of speech isn’t a requirement of the word parse. That’s the linguistic definition. In computer science identifying tokens is parsing.

That’s certainly one level of parsing, and sometimes all you need, but as the article you posted says, it more usually refers to generating a parse tree. To do that in a natural language isn’t happening with a regex.

None of these examples are for parsing English sentences. They parse completely different formal languages. That it’s text is irrelevant, regex usually operates on text.

You cannot write a regex to give you for example “the subject of an English sentence”, just as you can’t write a regex to give you “the contents of a complete div tag”, because neither of those are regular languages (HTML is context-free, not sure about English, my guess is it would be considered recursively enumerable).

You can’t even write a regex to just consume <div> repeated exactly n times followed by </div> repeated exactly n times, because that is already a context-free language instead of a regular language. In fact, it is the classic example of a minimal context-free language, which Wikipedia also uses.

None of these examples are for parsing English sentences.

Read it again:

“At one point while working on the manuscript for this book, I ran such a tool on what I’d written so far”

The author explicitly stated that he used regex to parse his own book for errors! The example was using regex to parse html.

You can’t even write a regex to just consume <div> repeated exactly n times followed by </div> repeated exactly n times,

Just because regex can’t do everything in all cases doesn’t mean it isn’t useful to parse some html and English text.

It’s like screaming, “You can’t build an Operating system with C because it doesn’t solve the halting problem!”
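Since the `<div>` repeated n times example keeps coming up in this exchange, here is a small Python sketch of what both sides are saying. All names (`UP_TO_3`, `matches_up_to_3`, `is_n_open_n_close`) are made up for illustration. A fixed regex without recursion has to hard-code every depth it wants to accept, so it is perfectly usable up to that bound and wrong beyond it; a few lines of counting handle any n.

```python
import re

OPEN, CLOSE = "<div>", "</div>"

# A plain regex can only spell out a bounded number of depths (here n = 1..3).
UP_TO_3 = re.compile(
    r"<div></div>"
    r"|(?:<div>){2}(?:</div>){2}"
    r"|(?:<div>){3}(?:</div>){3}"
)


def matches_up_to_3(s):
    return UP_TO_3.fullmatch(s) is not None


def is_n_open_n_close(s):
    """True if s is <div> repeated n times, then </div> repeated n times, n >= 1."""
    opens = 0
    # Count the leading <div> tags.
    while s.startswith(OPEN, opens * len(OPEN)):
        opens += 1
    # Whatever is left must be exactly the same number of </div> tags.
    rest = s[opens * len(OPEN):]
    return opens >= 1 and rest == CLOSE * opens
```

With four levels of nesting, `is_n_open_n_close` still says yes and `matches_up_to_3` says no. Engines with recursive patterns can go further than this, but Python's built-in `re` module does not offer recursion.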

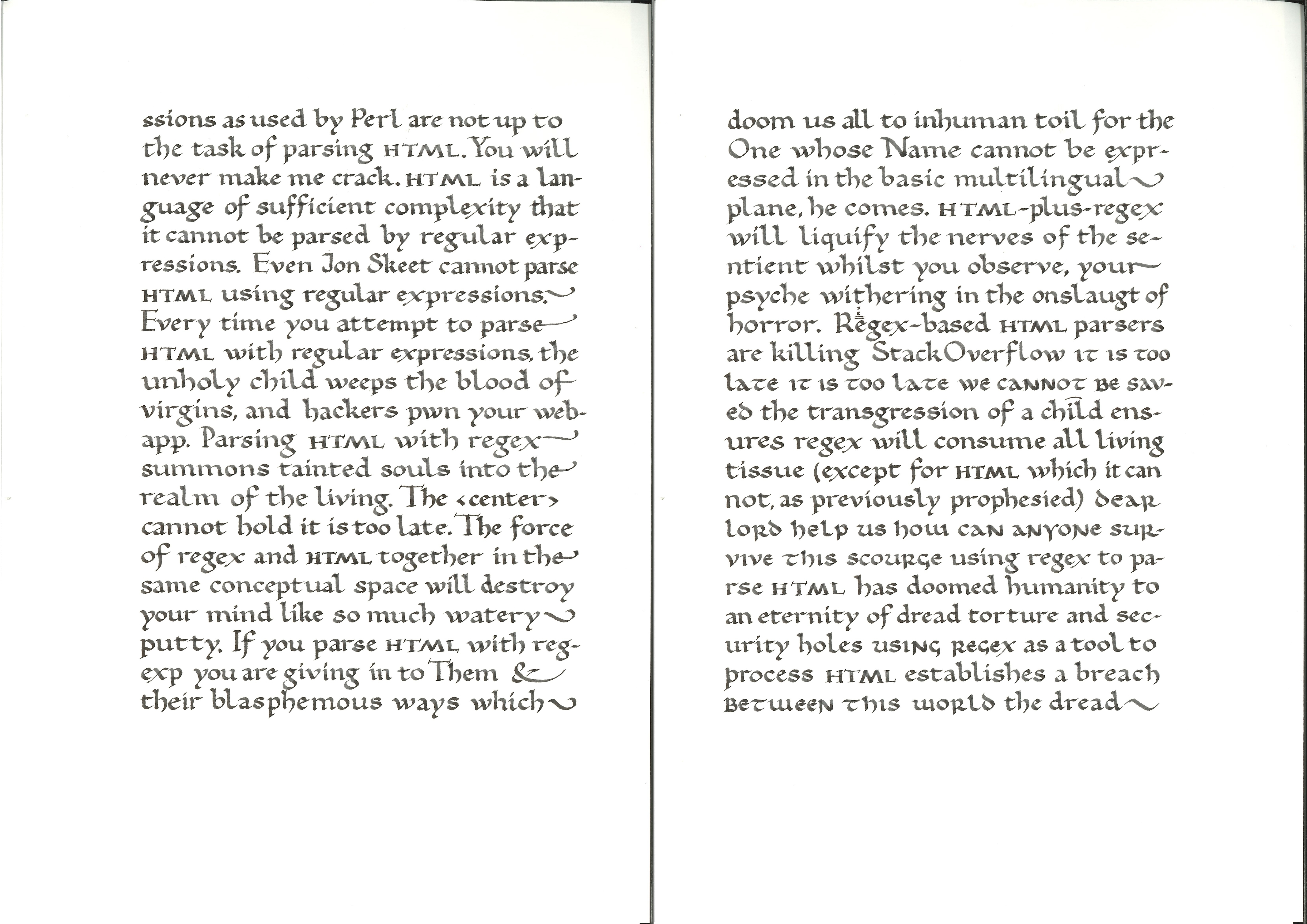

The text does technically give the reason on the first page:

It is not a regular language and hence cannot be parsed by regular expressions.

Here, “regular language” is a technical term, and the statement is correct.

The text goes on to discuss Perl regexes, which I think are able to parse at least all languages in LL(*). I’m fairly sure that is sufficient to recognize XML, but am not quite certain about HTML5. The WHATWG standard doesn’t define HTML5 syntax with a grammar, but with a stateful parsing procedure which defies normal placement in the Chomsky hierarchy.

This, of course, is the real reason: even if such a regex is technically possible with some regex engines, creating it is extremely exhausting and each time you look into the spec to understand an edge case you suffer 1D6 SAN damage.

https://en.m.wikipedia.org/wiki/Regular_language

HTML is famously known for not being a regular language. An explanation isn’t required, you can find many formal proofs online (indeed, a junior year CS student should be able to write a proof after their DS/algo/automata classes).

This very old post is funny because, despite HTML being so famously known as irregular, Stack Overflow questions kept popping up asking how to use regular expressions to parse HTML, which you can’t do.

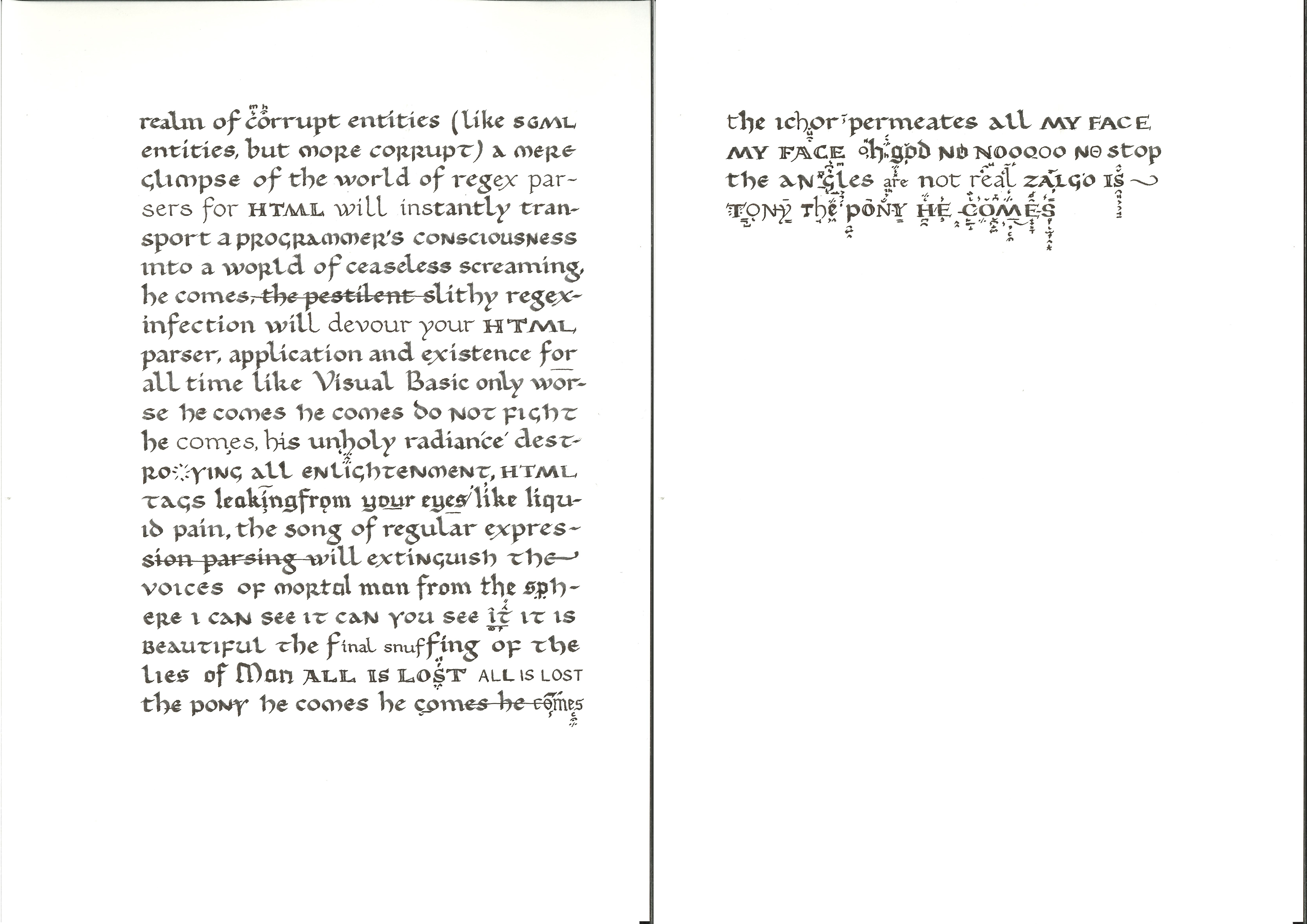

I appreciate the Zalgo calligraphy in particular.

Actually, you can’t even parse HTML (5) with specialized tools, or by converting it and then using XML linters (they quit out due to too many errors). The only tools capable of reliably parsing HTML (mostly) are the big 3 browser engines. That’s my experience from converting saved webpages to asciidoctor: it involves cleaning up manually, despite tidy and pandoc.

This isn’t true. HTML5 made a very strict set of rules and there are a large handful of compliant parsers. But yes, you absolutely can’t use an XML parser. You can’t even use an XML emitter, as you can emit valid XML that means something completely different in HTML.
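A quick way to see the "you absolutely can’t use an XML parser" part, sketched with Python's standard library only. `SNIPPET`, `parses_as_xml`, and `html_events` are names I made up; and to be clear, `html.parser` is a lenient tokenizer, not one of the spec-compliant HTML5 parsers mentioned above, so it only stands in for "something that understands HTML conventions".

```python
import xml.etree.ElementTree as ET
from html.parser import HTMLParser

# Fine as HTML: <br> is a void element and takes no closing tag.
SNIPPET = "<p>one<br>two</p>"


def parses_as_xml(text):
    """True if an XML parser accepts text as well-formed."""
    try:
        ET.fromstring(text)
        return True
    except ET.ParseError:
        return False


class TagLogger(HTMLParser):
    """Records start and end tags in the order they are seen."""

    def __init__(self):
        super().__init__()
        self.events = []

    def handle_starttag(self, tag, attrs):
        self.events.append(("start", tag))

    def handle_endtag(self, tag):
        self.events.append(("end", tag))


def html_events(text):
    logger = TagLogger()
    logger.feed(text)
    logger.close()
    return logger.events
```

`parses_as_xml(SNIPPET)` is false because the XML parser sees `</p>` closing an unclosed `<br>`, while `html_events(SNIPPET)` happily reports the `p` and `br` tags.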

…what a fucking disaster. I still wish XHTML won.

Real question, why? I feel like there’s a story there

Anyone else read that in the Monty Python holy hand grenade style?

Xhtml is right out!

I thought it was a font at first but it looks like actual calligraphy.

It’s a personal variant of Foundational script, with uncial and a modernized textura when it starts to derail into zalgo-ness, written with a Lamy Joy 1.9mm nib.

I am so DOM-ed up that I have to wear a ball-gag

Zalgo is Tony the Pony.

~he comes~

he comes

he comes

h̵̝̖͂e̵͖͈͐ ̷̛̪̗̜̥̓̉́c̸̜͎̬̦̦̀̀̀͛̚o̴̳͙͂m̷̲̓͋͆́̃ȇ̷̯̞͚̹͗̉͝ͅs̵̹͔̜͕̖̅͗̂͌̀

Not knowing it was impossible, he got there and did it!

I tried using regex to parse XML files once. Then I found this post and realized XML was even worse than HTML.

XHTML be like “Lemme introduce myself”

can anyone recommend a tool to parse xml?

Microsoft transaction SQL version 1 newer than what we have.

We had to write our own xml parser. And yes it sucks.

XSLT =P

The Chomsky hierarchy is a fucking myth. HTML isn’t a formal language but it’s context-free? GTFO, next you’ll be telling me the earth isn’t flat.