I usually ask AI to summarize it and then I get a pretty good idea of what it was meant to do. It’s just another tool to me. AI generated code sucks but it’s nice when it’s a quick summary.

I run a personal dnsmasq just for DNS resolving/routing. It integrates well with NetworkManager. It’s easy to work with, and having dnsmasq handle DNS resolution and routing is very reliable. A single command reloads NetworkManager, which also reloads the integrated dnsmasq. I like it, and it gives me a lot of control. I hate having to use the hosts file when I am connecting over VPN to labs that have their own network. dnsmasq is way better at handling subdomains than the hosts file, and it feels way more reliable than just hoping the minimal DNS routing system works properly.
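For anyone wanting to try this, here is a rough sketch of that kind of setup, staged into a scratch directory instead of /etc so nothing real gets touched. The domain `lab.example`, the addresses, the `PREFIX` variable, and the file names are placeholders I made up. The `dns=dnsmasq` setting and the `server=/domain/ip` and `address=/domain/ip` lines are standard NetworkManager and dnsmasq options.

```shell
#!/bin/sh
# Sketch only: stage NetworkManager + dnsmasq snippets under a scratch prefix.
# PREFIX, lab.example, 10.8.0.1 and 10.8.0.42 are placeholders (assumptions).
PREFIX="${PREFIX:-$(mktemp -d)}"
mkdir -p "$PREFIX/etc/NetworkManager/conf.d" "$PREFIX/etc/NetworkManager/dnsmasq.d"

# Ask NetworkManager to run its own dnsmasq instance as the local resolver.
cat > "$PREFIX/etc/NetworkManager/conf.d/00-use-dnsmasq.conf" <<'EOF'
[main]
dns=dnsmasq
EOF

# server=  : forward lab.example and all of its subdomains to the lab's DNS server.
# address= : answer box.lab.example (and its subdomains) with a fixed IP,
#            which is the part a hosts file cannot do for subdomains.
cat > "$PREFIX/etc/NetworkManager/dnsmasq.d/lab.conf" <<'EOF'
server=/lab.example/10.8.0.1
address=/box.lab.example/10.8.0.42
EOF

echo "staged in $PREFIX"
```

Once the two files look right, copying them to the same paths under / and running something like `sudo systemctl restart NetworkManager` should pick them up.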

I know this is a day old and most people who would have seen this already have moved on, but this is a simple fix. In fact if you have secure boot enabled, the Nvidia driver installation will detect it and start the signing process. If you don’t have secure boot enabled, then it will skip it. I think having secure boot enabled and properly signing your drivers is good to not end up in that situation again. Though I understand how annoying it can be too. Sigh

I have a little flash chip reader and backed up my BIOS, so I can flash it back onto the laptop. Even modified it to unlock the advanced menus, lol

But the rm -rf thing didn’t nuke it, so I guess I’m safe either way

So what you are saying is, it takes after its Mom, and Dad went out to get milk and hasn’t returned in years?

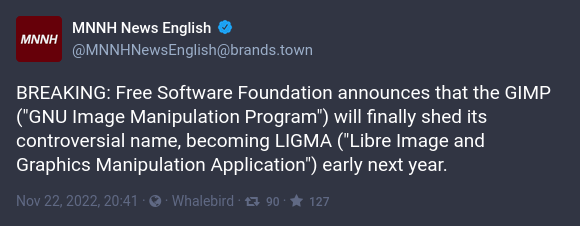

More edgier than LIGMA, apparently lol

Well, it seemed from your comment that you expected this to just work without tinkering, yet now you admit to tinkering? That makes for a rather confusing story. When I’m tinkering, I’m exploring, and I expect to run into edge cases or unsupported environments. Linux may be great, but it’s just a kernel with GNU on top to help build the larger OS. I believe the attitude towards Linux is a bit misguided. It is a great tool, and its strengths mainly lie in the freedom of usage that allows for both fine-tuned control and automatability. I’d say Windows and macOS are strictly non-automatable environments unless you venture into the developer side, and that will undoubtedly bring some problems with it. As such, many systems that need to be hands-off and run with high uptime use Linux kernels. Being able to automate the process with minimal user input is essential to the performance and reliability that critical systems demand.

Again, I did not mean to condemn your actions, but rather to alert you to the different problems these tools were made to solve. macOS, and thereby its hardware, was geared towards being an Apple-only product that is only properly supported by Apple, and the problem it solves is to be a tool for rich and self-conscious individuals.

Windows was created to be a home and enterprise OS that can be used on almost any system, which is quite an outstanding feat, but it really works because of the sheer number of developers and users behind it. Mind you, even Windows was not made to be extremely automatable. Tools are being created to automate tasks on it, but many are closed source and tied to requiring funding. I even ran into some odd issues with it every once in a while.

Linux was expressly made to be a minimal system offering high uptime and high automatability, free for everyone to use or contribute to. This lets users and admins set up their systems to be more hands-off for tasks that are extremely time-consuming or that have to be worked on continually, while keeping costs low. Only recently have Linux-based distributions been able to make use of features and packages geared towards users who need to do manual tasks. Wayland is finally becoming more stable, driver support from large manufacturers is improving, and even emulation of Windows APIs through Proton/Wine is getting better. This gives users the ability to do manual tasks and mix custom-made automation scripts/tools into their environments.

Many see the hype and assume they can use Linux systems the same way they used the very well-funded manual systems that Windows and macOS offer. Instead, Linux is just a tool, and it can be useful when it is needed.

You tried to install non-Apple-approved software (the entire OS, in this case) on a Mac system. Imagine how hard it is for Linux developers to support this black-box hardware configuration.

Try using something that is actually easier to program for and use when running Linux-type OSes. I usually suggest AMD.

If you need a strong graphics card on a laptop, I think the Framework laptops are more than capable of offering that kind of flexibility. If you feel the power-hungry GPU will take too much battery, you can remove it without even thinking about a screwdriver.

If you need ARM, be mindful that the ARM ecosystem is still quite new for PC users. There are not many software choices yet, but it does show some promise.

If you think you need Mac hardware, then you don’t need to go around throwing Linux on it. macOS is already Unix-like. You will have to live with the fact that no one outside of Apple will have proper hardware support at the OS level, let alone driver support.

Well I think it was pretty toxic how people hit you with downvotes but reflecting on it, your comment was not meant for this thread.

I do believe that with a bit more info and some images, your post could be a completely new thread. I don’t know what fill tiling is or what that scripted WM could mean. I like your counterargument against DEs that focus on the dual interaction of mouse and keyboard and emphasize cursor control (inclusive of tablets and touchscreens).

I firmly believe Linux is currently much more powerful than the other major OSes because it can be heavily automated. So if you do end up making a thread on your DE, let me know.

It’s like saying automatic transmission is worse than manual transmission.

Yes, but I usually add my public key to the authorized_keys file and turn off password authentication once I have logged in with a password. On top of that, I have an sshpass one-liner that takes care of this for me. It’s much easier than manually typing a password the next time. I save it and just run it every time I think about using password login. The next time I need to ssh, I know the password login is not necessary.

    sshpass -p 'PASSWORD' ssh USER@IP.ADDRESS \
      'mkdir -p ~/.ssh && cat >> ~/.ssh/authorized_keys &&
       printf "%s\n" "Match User *,!root" "PasswordAuthentication no" "Match all" >> /etc/ssh/sshd_config' \
      < ~/.ssh/id_rsa.pub && ssh USER@IP.ADDRESS

At the next reboot, your system will only accept key logins, except for root. I hope the root user password is secure. I don’t require it for root because if a hacker does gain shell access, a password (or priv esc exploit) is all they need to gain a root shell. It is also a safety net in case you need to log in and have lost your private key.
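Since a one-liner like this tends to get rerun, here is a small sketch of making the key step safe to repeat. `add_key_once` is a hypothetical helper of mine, not part of OpenSSH, and the demo key and paths are made up. It only appends the key when that exact line is not already in the file.

```shell
#!/bin/sh
# add_key_once PUBKEY_FILE AUTH_FILE
# Hypothetical helper (not an OpenSSH tool): append the public key to
# AUTH_FILE only if that exact line is not already present.
add_key_once() {
  key=$(cat "$1") || return 1
  touch "$2" && chmod 600 "$2"
  grep -qxF -- "$key" "$2" || printf '%s\n' "$key" >> "$2"
}

# Demo against throwaway files so no real ~/.ssh is touched.
demo_dir=$(mktemp -d)
printf '%s\n' 'ssh-ed25519 AAAAEXAMPLEKEY user@laptop' > "$demo_dir/id.pub"
add_key_once "$demo_dir/id.pub" "$demo_dir/authorized_keys"
add_key_once "$demo_dir/id.pub" "$demo_dir/authorized_keys"

# After two runs the file should still hold a single key line.
wc -l < "$demo_dir/authorized_keys"
```

On a real host the same idea would run against `~/.ssh/authorized_keys`. `ssh-copy-id` covers the same ground if it is installed.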

Me too, I sometimes wish to read a larger wall of text, BUT only if there are proper categories and examples. Not just hypothetical and abstract ideas and ruminations.

1·2 years ago

Yep, it’s one thing to have it, but you actually use a lot of OS tools

While many can agree on a desktop environment’s importance, the desktop environment is rn closely tied to the distro’s philosophy. Many who venture outside the major distros will need to set up their own environment.

You are on Linux, obviously that fixed your problem. But yeah, the setting for faster wakeup from sleep is hidden somewhere, and Microsoft does not want that to be toggled off and may even ignore it, lol

Windows keeps the computer awake and does not do sleep like it used to anymore. S3 sleep, that is. Keeps wifi connected and all that jazz. Battery drain is significantly worse now.

I think it is because there is a setting about faster wakeup from sleep or something. I think it also keeps the wifi connected or awake on laptops.

7·2 years ago

This is why KVM/QEMU with virtio drivers is massively helpful for using Windows-specific software without needing to dual-boot on short notice. Proton also helps run many Windows-only games on Linux. Too bad the biggest strength is also a weakness. It’s just a pain to set up and to figure out the problems that come from inexperience.

Yeah, this is the way. Doing it manually is fun and all, but it’s largely unnecessary extra effort, since all of it is just configuration of the system. Archinstall is just the text-UI version that still offers the customizability of a manual install. Heck, you can load a configuration file that you made beforehand, so you don’t need to babysit the installation and can reproduce it on other systems/PCs.

Glibc’s qsort will default to either insertion sort, mergesort, or heapsort. Quicksort itself is used when it cannot allocate extra memory for mergesort or heapsort. Insertion sort is still used in the quicksort code when the final 4 items need to be sorted.

Normally it is simply mergesort or heapsort. How do I know this? Because there was a recent CVE for quicksort, and to reproduce the bug I had to force the memory allocation to fail with a max-size item. It was interesting reading the source code.

That is if you are not on a recent version of qsort, which simply removed quicksort altogether in favor of mergesort + heapsort.

Older versions still had quicksort, and some even had insertion sort. It’s interesting to look at all the different versions of qsort.