[…] [Hyprland] is made by a transphobe and a large part of the community is also […]

Do you have a source?

All of this user’s content is licensed under CC BY 4.0.

Just looks like windows to me […]

That’s because Windows copied KDE Plasma, obviously. /j

What specific features are you looking for?

Can you ping the Jellyfish server from the laptop? Can any other device access the Jellyfish server?

I use KDE Plasma on my desktop and GNOME on my laptop, though, in my experience, GNOME has been mildly annoying. I just find it too “restrictive” when compared with KDE. I’m also not super fond of how some apps seem to integrate rather poorly with GNOME. I do think that GNOME’s interface works well on a laptop, but the UX hasn’t been the best for me. I have few, if any, complaints regarding KDE.

I will preface by saying that I am not casting doubt on your claim; I’m simply curious: why would it be so unlikely for such an exploit to occur? What makes you so confident?

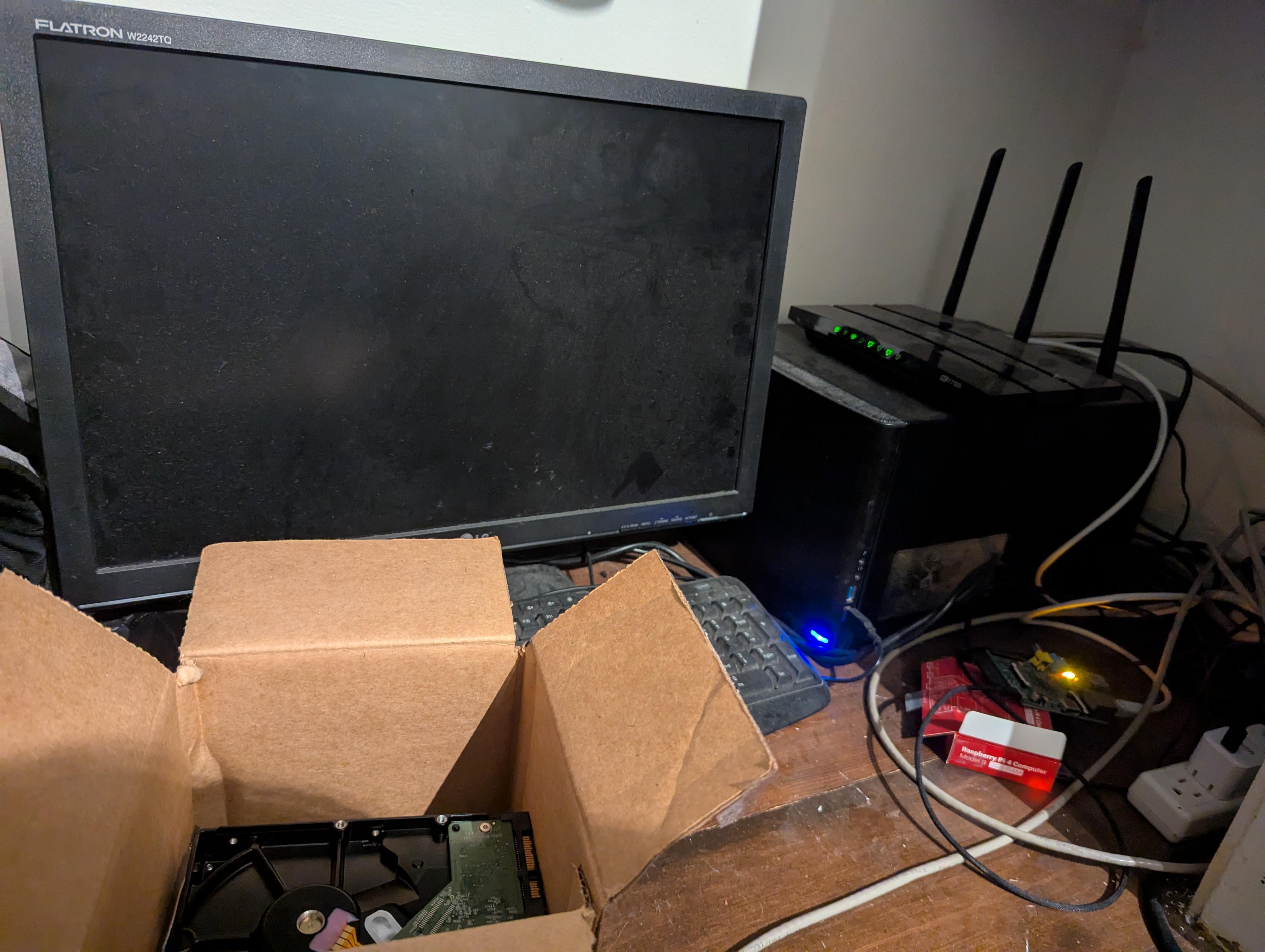

Looks like a Fractal Node 304?

Yep! I’ve found that the case is possibly a little too cramped for my liking (I’m not overly fond of the placement of the drive bay hangers), but overall it’s been alright. It’s definitely a nice form factor.

It wasn’t a deliberate choice. It was simply hardware that I already had available at the time. I have had no performance issues of note as a result of the hardware’s age, so I’ve seen no reason to upgrade it just yet.

For clarity, I’m not claiming that it would, with any degree of certainty, lead to incurred damage, but the ability to upload unvetted content carries some degree of risk. For there to be no risk, fedi-safety/pictrs-safety would have to be guaranteed to be absolutely 100% free of any possible exploit, as well as the underlying OS (and maybe even the underlying hardware), which seems like an impossible claim to make, but perhaps I’m missing something important.

“Security risk” is probably a better term. That being said, a security risk can also imply a privacy risk.

Yeah, that was poor wording on my part — what I mean to say is that there would be unvetted data flowing into my local network and being processed on a local machine. It may be overparanoia, but that feels like a privacy risk.

You’re referring to using only fedi-safety instead of pictrs-safety, as was mentioned in §“For other fediverse software admins”, here, right?

One thing you’ll learn quickly is that Lemmy is version 0 for a reason.

Fair warning 😆

One problem with a big list is that different instances have different ideas over what is acceptable.

Yeah, that would be where being able to choose from any number of lists, or to freely create one comes in handy.

create from it each day or so to run on the images since it was last destroyed.

Unfortunately, for this use case, the GPU needs to be accessible in real time; there is a 10-second window when an image is posted for it to be processed [1].

[…]

- fedi-safety must run on a system with GPU. The reason for this is that lemmy provides just a 10-seconds grace period for each upload before it times out the upload regardless of the results. [1]

[…]

Probably the best option would be to have a snapshot

Could you point me towards some documentation so that I can look into exactly what you mean by this? I’m not sure I understand the exact procedure that you are describing.

please touch my-doc🥺👉👈