But they are not running Debian sid.

Eskuero

I like sysadmin, scripting, manga and football.

- 63 Posts

- 265 Comments

5·7 days ago

Glad I bought drives in December. As long as they don't break, they should easily hold me until 2030.

Yes, I do. I cooked up a small Python script that runs at the end of every daily backup:

```python
import json
import os
import subprocess

# Output directory
OUTPUT_DIR = "/data/dockerimages"
os.makedirs(OUTPUT_DIR, exist_ok=True)

# Grab all the docker images. Each line is a JSON string defining one image
imagenes = subprocess.run(
    ["docker", "images", "--format", "json"],
    stdout=subprocess.PIPE,
    stderr=subprocess.DEVNULL,
    text=True,
    check=True,
).stdout.splitlines()

for imagen in imagenes:
    if not imagen.strip():
        continue  # skip blank lines
    datos = json.loads(imagen)
    # ID of the image to save
    imageid = datos["ID"]
    # Compose the output name like this:
    # ghcr.io-immich-app-immich-machine-learning:release:2026-01-28:3c42f025fb7c.tar
    # (single quotes inside the f-string keep it valid before Python 3.12)
    created = datos["CreatedAt"].split(" ")[0]
    outputname = f"{datos['Repository']}:{datos['Tag']}:{created}:{imageid}.tar".replace("/", "-")
    outputpath = os.path.join(OUTPUT_DIR, outputname)
    # If the file already exists just skip it
    if not os.path.isfile(outputpath):
        print(f"Saving {outputname}...")
        # Save under a temporary name first, so an interrupted save is not
        # mistaken for a finished tarball on the next run
        tmppath = outputpath + ".part"
        subprocess.run(["docker", "save", "-o", tmppath, imageid], check=True)
        os.replace(tmppath, outputpath)
    else:
        print(f"Already exists {outputname}")
```
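Since each filename packs repository, tag, creation date and image ID into one string, it can be split back apart, which helps when picking a tarball to restore with `docker load -i`. A minimal sketch; `parse_backup_name` and `BackupName` are hypothetical helpers, not part of the script. Splitting from the right keeps a registry `host:port` inside the repository field, but the repository comes back with `-` where `/` used to be, because that replacement is lossy.

```python
from typing import NamedTuple


class BackupName(NamedTuple):
    """Fields recovered from a <repo>:<tag>:<date>:<id>.tar name (hypothetical helper)."""
    repository: str  # "/" was already replaced by "-" when saving
    tag: str
    created: str
    image_id: str


def parse_backup_name(filename: str) -> BackupName:
    """Split a backup tarball name back into its four parts."""
    if not filename.endswith(".tar"):
        raise ValueError(f"not a .tar backup: {filename}")
    stem = filename[: -len(".tar")]
    # Split from the right: tag, date and ID never contain ":",
    # but a registry host:port inside the repository can
    parts = stem.rsplit(":", 3)
    if len(parts) != 4:
        raise ValueError(f"unexpected backup name: {filename}")
    return BackupName(*parts)


if __name__ == "__main__":
    info = parse_backup_name(
        "ghcr.io-immich-app-immich-machine-learning:release:2026-01-28:3c42f025fb7c.tar"
    )
    print(info.repository, info.tag, info.created, info.image_id)
    # → ghcr.io-immich-app-immich-machine-learning release 2026-01-28 3c42f025fb7c
```

Because the images are saved by ID, a loaded tarball may come back untagged; `docker tag <image_id> <repo>:<tag>` puts a name back on it (turning the `-` back into `/` by hand).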

26, though that includes multi-container services like Immich or Paperless, which have 4 each.

One basic SMS ER/HP/CRIT build for the Nefer team to replace Aino 😇

And one cope HP/HP/HP on 2PC/2PC build to boost the C2 buff on C6 Chiori 😈

I am NOT farming VV again. She will inherit some pieces from Ineffa once my clanker gets on the new set.

1·2 months ago

The Pi can play hardware-accelerated H.265 at 4K 60 fps.

9·2 months ago

`echo 'dXIgbW9tCmhhaGEgZ290dGVtCg==' | base64 -d`

From the thumbnail, I thought Linus had been turned into a priest.

I run changedetection and monitor the sample .yml files that projects usually host directly in their git repos.
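The idea behind watching those sample files is plain content-change detection: fetch the file, hash it, and compare the hash with the one from the previous check. A minimal sketch of that idea only, not changedetection's actual implementation; the `has_changed` name and the JSON state-file layout are hypothetical, and fetching is left to the caller.

```python
import hashlib
import json
import os


def has_changed(url: str, content: bytes, state_path: str) -> bool:
    """Return True if `content` differs from what was last seen for `url`.

    Keeps one SHA-256 digest per URL in a small JSON file (hypothetical
    layout). The first time a URL is seen counts as "no change".
    """
    state = {}
    if os.path.exists(state_path):
        with open(state_path, encoding="utf-8") as f:
            state = json.load(f)

    digest = hashlib.sha256(content).hexdigest()
    previous = state.get(url)
    state[url] = digest  # remember the latest version for the next check

    with open(state_path, "w", encoding="utf-8") as f:
        json.dump(state, f, indent=2)

    return previous is not None and previous != digest
```

In a cron job, the content would typically come from `urllib.request.urlopen(url).read()` on the raw URL of a project's sample compose file, with a notification sent whenever `has_changed` returns `True`.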

1·3 months ago

Bring back my computer as well

3·3 months ago

People say: go back in time and pick the correct lotto numbers.

I say: go back in time and sell my 8TB disk for 80 billion.

2·3 months ago

Lysithea, ready to blast the entire church

“we would welcome contributions to integrate a performant and memory-safe JPEG XL decoder in Chromium. In order to enable it by default in Chromium we would need a commitment to long-term maintenance.”

yeah

For the price of a car, I would expect the SSD to grow wheels so I could actually drive it.

2·3 months ago

gpt-oss:20b is only 13GB

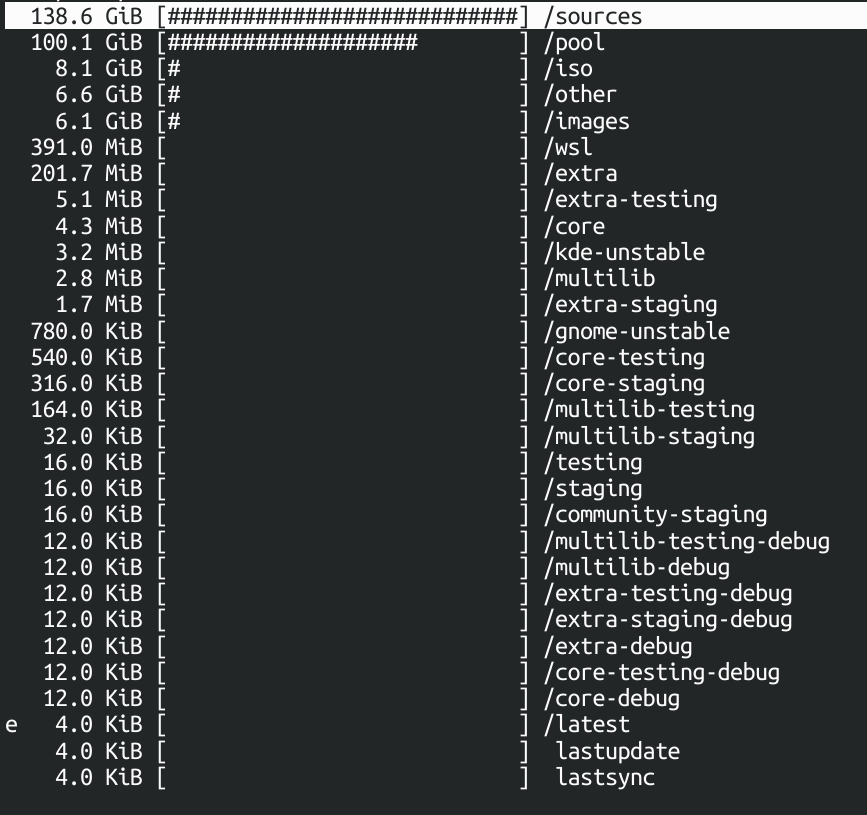

This includes everything, for a total of 261G.

My bad, I was too busy running Arch to watch it, btw.